Introduction

Understandably, customers want to know how much Snowflake costs, and as with many computing-related questions the initial answer is “it depends”. There are no fixed costs with Snowflake because we use a consumption-based model, and the Snowflake architecture builds in economy as part of our design philosophy. Our goal is to make sure you pay only for what you use and do not waste money on idle or unnecessary resources.

Storage

For storage, the cost per terabyte is roughly the same as that of the underlying cloud platform's native storage. However, because we compress all data ingested into Snowflake, the effective cost is reduced by the average compression ratio. This ratio inevitably varies with the nature of the source data, so the best guideline we can offer is somewhere between 3 and 5 times. For example, one customer comparing Snowflake with a cloud vendor's database said “we tripled the amount of data we’re storing, for about half the cost”. Snowflake does not charge storage costs for the result cache, which retains all query results for up to 24 hours, nor for the Virtual Warehouse storage caches.
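The compression arithmetic above can be sketched in a few lines of Python. This is a minimal sketch, assuming storage is billed on the compressed size; the price per TB-month is a hypothetical placeholder rather than a quoted rate, and the function name `effective_storage_cost` is purely illustrative.

```python
# Minimal sketch: storage is assumed to be billed on compressed size, so the
# raw volume is divided by the compression ratio before pricing.
# ASSUMPTION: the price below is a hypothetical placeholder, not a real rate.

HYPOTHETICAL_PRICE_PER_TB_MONTH = 20.0  # illustrative only


def effective_storage_cost(raw_tb: float, compression_ratio: float,
                           price_per_tb_month: float = HYPOTHETICAL_PRICE_PER_TB_MONTH) -> float:
    """Monthly cost of storing `raw_tb` terabytes of uncompressed source data."""
    if compression_ratio <= 0:
        raise ValueError("compression_ratio must be positive")
    compressed_tb = raw_tb / compression_ratio
    return compressed_tb * price_per_tb_month


if __name__ == "__main__":
    raw_tb = 30.0  # uncompressed source data, in TB (hypothetical)
    for ratio in (1.0, 3.0, 5.0):  # 1x = no compression; 3-5x is the guideline range
        cost = effective_storage_cost(raw_tb, ratio)
        print(f"{ratio:.0f}x compression: {cost:,.2f} per month")
```

With the placeholder price, 30 TB of raw data costs 600 units per month uncompressed, 200 at 3x and 120 at 5x. Only the ratios between those figures carry over to real pricing.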

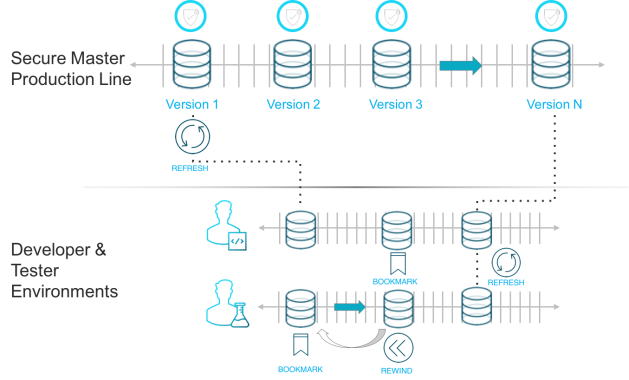

Another way Snowflake reduces storage cost is zero-copy cloning. With Snowflake you can clone databases, schemas or tables with almost no storage overhead. Clones are created within seconds, so you can have as many development or test environments as you need at minimal storage cost. Snowflake cloning can also be used for point-in-time reporting environments and backups.
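Why can a clone be created in seconds with almost no storage overhead? One way to picture it is to treat a table as a list of references to immutable storage blocks (Snowflake calls its storage units micro-partitions). A clone then copies only the references. The toy model below uses hypothetical `BlockStore` and `Table` classes. It illustrates the idea under these assumptions and is not Snowflake's actual implementation. It also shows that storage grows only once a clone diverges from its source, because changed data is written to new blocks.

```python
# Toy model of zero-copy cloning: a table holds references to immutable blocks,
# and cloning copies the references, not the data.
# ASSUMPTION: hypothetical classes for illustration, not Snowflake internals.
from dataclasses import dataclass, field


@dataclass
class BlockStore:
    """Holds immutable data blocks; billable storage is the sum of stored blocks."""
    blocks: dict[int, bytes] = field(default_factory=dict)
    next_id: int = 0

    def put(self, data: bytes) -> int:
        block_id = self.next_id
        self.blocks[block_id] = data
        self.next_id += 1
        return block_id

    def stored_bytes(self) -> int:
        return sum(len(b) for b in self.blocks.values())


@dataclass
class Table:
    store: BlockStore
    block_ids: list[int] = field(default_factory=list)

    def insert(self, data: bytes) -> None:
        self.block_ids.append(self.store.put(data))

    def clone(self) -> "Table":
        # Metadata-only operation: copy the list of block references.
        return Table(self.store, list(self.block_ids))

    def update_block(self, index: int, data: bytes) -> None:
        # Blocks are immutable, so a change writes a new block and repoints
        # only this table; any other table keeps the original block.
        self.block_ids[index] = self.store.put(data)


if __name__ == "__main__":
    store = BlockStore()
    prod = Table(store)
    prod.insert(b"x" * 1000)
    prod.insert(b"y" * 1000)
    print("after load: ", store.stored_bytes())  # 2000
    dev = prod.clone()
    print("after clone:", store.stored_bytes())  # 2000, nothing copied
    dev.update_block(0, b"z" * 1000)
    print("after edit: ", store.stored_bytes())  # 3000, only the changed block is new
```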

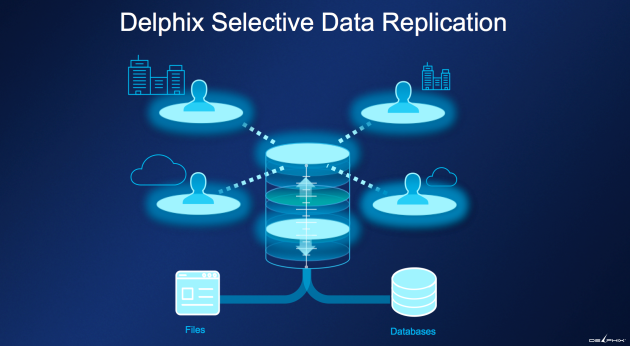

Snowflake data sharing allows you to share any portion of your data with another Snowflake user or a third party. Again, there is no copying, and there are no extracts to generate, maintain or store: Snowflake data sharing shares the underlying data blocks directly.

Storage costs are particularly important because they are constant: you pay for storage 24×7, and the only ways to trim them are to compress data, delete data or find a better rate.

Compute

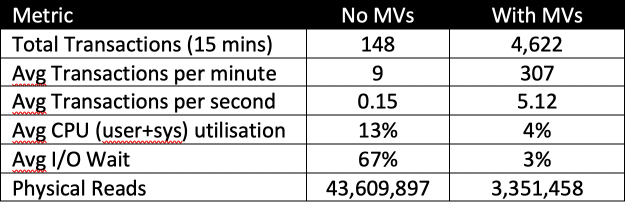

Next come compute costs, and this is where Snowflake gives you more control. Snowflake compute is paid for in credits, and billing is per second (after a 60-second minimum each time a VDW starts or resumes), so every saved second counts. Our smallest Virtual Data Warehouse (VDW), the XS, consumes one credit per hour, and each size up from XS to 4XL consumes credits at double the rate of the size below it. Since we tend to see linear scalability, running a workload on the next size up costs the same as running it on the smaller size: the consumption rate doubles, but the run time halves. Snowflake VDWs can be suspended at will, either explicitly or via policy, and a suspended VDW consumes no credits. A suspended VDW resumes within seconds when a query arrives, again if policy permits (99% of VDWs have auto-resume enabled). Because a VDW can be started, resumed or resized within seconds, customers can suspend VDWs with confidence and trim compute costs by keeping run time to the minimum.
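The sizing arithmetic above can be checked with a short Python sketch. The credit rates come from the text: 1 credit per hour for XS, doubling with each size up to 4XL. The 60-second minimum applied each time a warehouse starts or resumes is Snowflake's per-second billing rule. The perfectly linear speed-up used in the example is an assumption, not a measurement. The function names are illustrative.

```python
# Sketch of VDW sizing arithmetic: XS uses 1 credit/hour and each size up doubles
# the rate. Billing is per second with a 60-second minimum per start/resume.
# ASSUMPTION: the example treats each size as a fresh start for this workload.

SIZES = ["XS", "S", "M", "L", "XL", "2XL", "3XL", "4XL"]


def credits_per_hour(size: str) -> int:
    """XS = 1, S = 2, M = 4, ... 4XL = 128 credits per hour."""
    return 2 ** SIZES.index(size)


def credits_used(size: str, runtime_seconds: float, minimum_seconds: float = 60) -> float:
    """Credits consumed by one continuous run of a VDW of the given size."""
    billed_seconds = max(runtime_seconds, minimum_seconds)
    return credits_per_hour(size) * billed_seconds / 3600


if __name__ == "__main__":
    # ASSUMPTION: perfectly linear scaling, so each size up halves the run time.
    runtime = 3600.0  # seconds on XS
    for size in SIZES:
        print(f"{size:>3}: {runtime:7.1f} s -> {credits_used(size, runtime):.2f} credits")
        runtime /= 2
```

Under the linear-scaling assumption, XS through 2XL each come out at exactly 1 credit for this hour of XS work. At 3XL and 4XL the run would drop below 60 seconds, so the minimum charge raises the cost to about 1.07 and 2.13 credits. For very short workloads, a larger VDW therefore no longer costs the same.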

Whether you scale a VDW down or ‘scale in’ by removing clusters from a multi-cluster VDW, the aim of trimming back compute is the same: to cut the credit-consuming run time you pay for, reducing compute consumption or stopping it altogether.
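To see how a suspend policy trims the run time you pay for, here is a toy simulation of one VDW with auto-suspend and auto-resume. It is a sketch under stated assumptions. Queries are given as hypothetical `(start, end)` times in seconds. The VDW resumes instantly when a query arrives and suspends after `auto_suspend` idle seconds, loosely modelled on the warehouse `AUTO_SUSPEND` setting. Each running period is billed with a 60-second minimum. Resume latency and other billing details are ignored, and the function names are illustrative.

```python
# Toy simulation of auto-suspend / auto-resume on a single VDW.
# ASSUMPTIONS: instant resume, suspend after `auto_suspend` idle seconds,
# 60-second minimum per running period; real billing details are ignored.


def running_periods(queries, auto_suspend):
    """Merge (start, end) query intervals into periods when the VDW is running."""
    if auto_suspend <= 0:
        raise ValueError("this toy model needs a positive auto_suspend")
    periods = []
    for start, end in sorted(queries):
        stop = end + auto_suspend  # suspend time if no further query arrives
        if periods and start <= periods[-1][1]:
            # The query arrives before the VDW has suspended: extend the period.
            periods[-1][1] = max(periods[-1][1], stop)
        else:
            # The VDW was suspended: auto-resume starts a new running period.
            periods.append([start, stop])
    return [(s, e) for s, e in periods]


def billed_seconds(queries, auto_suspend, minimum_seconds=60):
    """Total billed seconds, applying the minimum to each running period."""
    return sum(max(e - s, minimum_seconds)
               for s, e in running_periods(queries, auto_suspend))


if __name__ == "__main__":
    # Three short queries spread over one hour (hypothetical workload).
    queries = [(0, 30), (600, 660), (3000, 3020)]
    for auto_suspend in (60, 600):
        seconds = billed_seconds(queries, auto_suspend)
        print(f"auto_suspend={auto_suspend:>3}s: billed {seconds} s of a 3600 s hour")
```

With these made-up timings, a 60-second policy bills 290 seconds and a 600-second policy bills 1,880 seconds, compared with 3,600 seconds for a VDW left running all hour. Multiply by the hourly credit rate of the VDW size to convert to credits. The trade-off of suspending aggressively is that a suspended VDW loses its local storage cache.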

Administration

Finally, Snowflake requires near-zero administration. With Snowflake there is no installation, no upgrades, no patches, no indexes, no partitions, no table statistics, no need to rebalance, re-stripe or replicate data, and almost no tuning. This level of automation substantially reduces administration costs.

In summary

- Storage costs lower than native cloud storage due to compression

- No storage costs for on-disk caches

- Near zero storage overhead for data cloning or data sharing

- Compute costs minimised by scaling down, scaling in and suspending VDWs

- Compute costs minimised by efficient query execution plans that require zero tuning, and by result caches

- Near zero administration

At the end of the day, it comes down to product philosophy. Snowflake wants to help organisations become data-driven by providing a cloud-native database that can support all data warehouse and data lake workloads. We want you to spend your time analysing data, not cloud vendor invoices.

Disclaimer: The opinions expressed in my articles are my own, and will not necessarily reflect those of my employer (past or present).